Four Approaches to LLMs for Enterprises, One Key: Your Data

Data is the Key to LLMs for Enterprises

Generative AI is a lot like the discovery of gold. At a time when the market has been calling for greater scrutiny of expenses, praising margins over growth at any cost, we have witnessed an unexpected outlier.

Generative AI has not only become a topic on everyone’s lips (and fingers) for consumers — thank you, ChatGPT and MidJourney — but it has also become a prevalent subject in corporate discussions. Executive teams are opening their wallets to explore this technology, become early adopters and hopefully gain a competitive edge.

We recently discussed the opportunities and challenges of AI and generative AI in customer experience (CX) today, and shared context and results from ActionIQ’s initial research to prepare the next generation of audience segmentation interfaces for business teams.

It’s time for us to explore how to turn these dreams of improved efficiency and increased revenue through the use of generative AI into a reality. However, this reality will vary for each enterprise organization.

The speed and cost of extracting value from generative AI in enterprise environments will depend on the maturity of data teams, data practices, and of course, the data itself.

There is a good reason for this: if data serves as the fuel for your CX activities, the same principle applies to generative AI technology ecosystems. Data is the key to Large Language Models (LLMs).

Four Approaches to LLMs for Enterprises

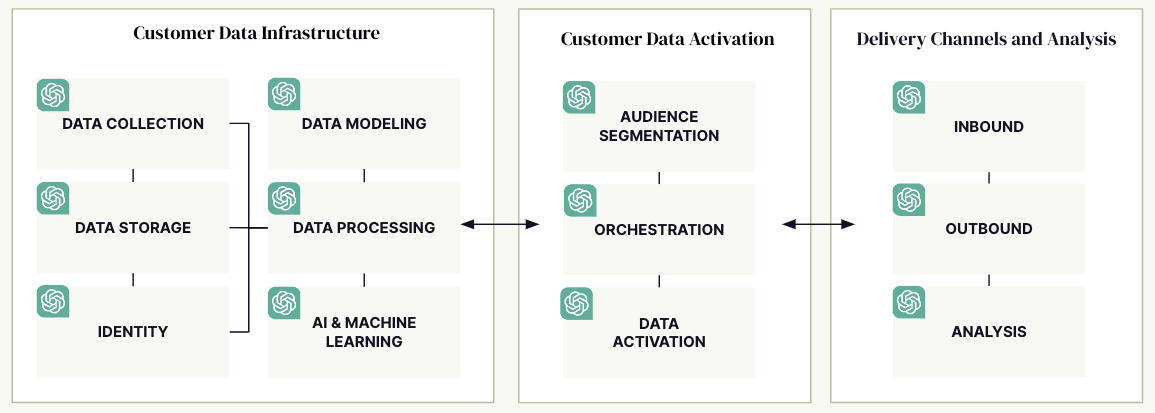

As I recently mentioned on LinkedIn, the marketing data stack proposed by tech vendors closely resembles existing technology, with the ChatGPT API plugged directly into it.

There is a valid reason for this: ChatGPT offers a low-barrier, low-cost, and easy-to-test option for both brands and tech vendors looking to explore Generative AI.

When evaluating Generative AI solutions and LLMs for marketing customer data stacks, I suggest exploring the following approaches:

| Approach | Description | Differentiated Company Asset | Investment |
|---|---|---|---|
| Third-Party LLMs | LLMs built and trained by external entities, utilizing open web data. OpenAI is an example. | ❌ No | Low |
| Third-Party LLMs with RAG² | Third-Party LLMs with the integration of additional data from the organization. | ✅ Yes | Medium |
| Fine-Tuned LLMs | LLMs created and trained by external entities but fine-tuned using the organization’s data. | ✅ Yes | High |
| In-House LLMs | LLMs developed and trained within the organization, utilizing its own data and teams. MosaicML, which was acquired by Databricks, offers solutions following this approach. | ✅ Yes | Very High |

² Retrieval-Augmented Generation (RAG) is the process of grounding a model with knowledge (e.g., data), which improves context relevance and reduces hallucinations.

Third-Party LLMs

Leveraging a model already built and trained that you can find with OpenAI, Cohere, or in the Hugging Face community, is going to be the lowest-cost option to get things started.

However, organizations should keep in mind that these pre-trained Third-Party LLM options are going to be commoditized in the future, very much like third-party data sets are for advertising use cases. Third-Party LLMs are and will remain valuable, but certainly not a differentiated asset for your organization in the long term.


Third-Party LLMs with RAG

Things start to get interesting when you complement a pre-built and pre-trained LLM with your own data. This process is known as Retrieval-Augmented Generation (RAG). For instance, if you’re a retailer receiving questions about orders, you can provide the LLM with your transaction data (including purchases and returns).

While this may sound straightforward, since the LLM itself remains unchanged, the improvement over using Third-Party LLMs without context can be significant.

Third-Party LLM providers are aware of the need for enterprise use cases and are taking action to support these requirements. Take Cohere, for example, which recently announced the addition of RAG capabilities to their Command model.
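To make the retailer example concrete, here is a minimal sketch of the two RAG steps: retrieve the relevant records, then add them to the prompt. Everything in it is an assumption for illustration (the `Transaction` fields, the sample records, and the `retrieve` and `build_prompt` helpers are hypothetical), and the call to the LLM provider is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    order_id: str
    customer_id: str
    kind: str  # "purchase" or "return"
    item: str
    amount: float


# Hypothetical records; a real system would query a database or a search index.
TRANSACTIONS = [
    Transaction("A-1001", "c-42", "purchase", "running shoes", 129.0),
    Transaction("A-1002", "c-42", "return", "running shoes", 129.0),
    Transaction("A-2001", "c-77", "purchase", "rain jacket", 89.5),
]


def retrieve(customer_id: str) -> list[Transaction]:
    """Retrieval step: keep only the records that belong to this customer."""
    return [t for t in TRANSACTIONS if t.customer_id == customer_id]


def build_prompt(question: str, customer_id: str) -> str:
    """Augmentation step: place the retrieved records ahead of the question."""
    context = "\n".join(
        f"- order {t.order_id}: {t.kind} of {t.item} (${t.amount:.2f})"
        for t in retrieve(customer_id)
    )
    return (
        "Answer using only the transaction records below.\n"
        f"Records:\n{context}\n\n"
        f"Question: {question}"
    )


# The resulting string is what would be sent to the third-party LLM.
prompt = build_prompt("Has my return been processed?", "c-42")
print(prompt)
```

The model itself is untouched; only the prompt changes, which is why this approach sits at a lower investment level than fine-tuning.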

Fine-Tuned LLMs

Taking it a step further involves the use of a fine-tuning process. This process enables your LLM to develop a deeper understanding of the intricacies of your business and data, ultimately resulting in significantly enhanced performance. Consequently, this amplifies the value of harnessing generative AI capabilities.

However, it’s important to note that fine-tuning an LLM, while enticing, can prove to be a complex and costly endeavor, especially when compared to the Third-Party LLMs with RAG option. Companies like Snorkel AI can help navigate the complexities with their expertise in LLM fine-tuning.

In-House LLMs

Building your own LLM in-house, using your own teams, knowledge and data, has the potential to yield the best results, as with any technology built in-house. However, it’s essential to note that success is not guaranteed. Not all enterprises and teams have the financial resources, time, and expertise needed to develop the technology in-house. Justifying the return on investment (ROI) for such an endeavor can be very challenging.

Considering the scarcity of expertise in LLMs and generative AI today, with many tech organizations competing for talent, this option may remain out of reach for most brands.

Evaluating the LLMs for Enterprises Approaches With Your Data

Setting cost aside for a moment, which of these LLM approaches could truly benefit your enterprise and data? By “benefit” I mean providing significant value that would justify eventually rolling out generative AI capabilities into your organization.

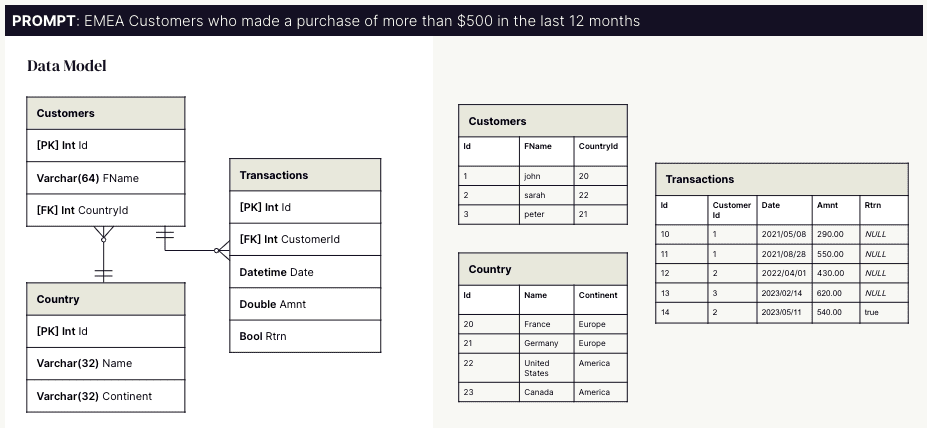

We will assess these options against three different scenarios for a single use case. We would like to access an audience segment by providing a natural language prompt, as follows:

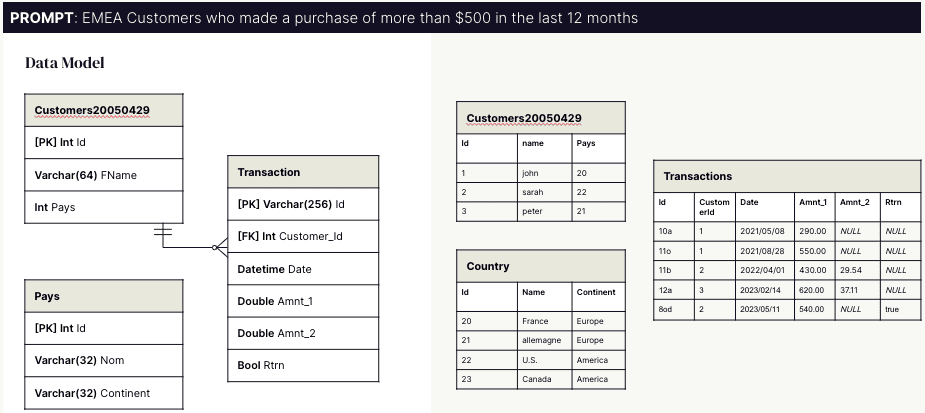

EMEA Customers who made a purchase of more than $500 in the last 12 months.

As a human reader, our brain can easily break down the components of this request:

- EMEA: A region where customers are likely located

- Customer: The target audience

- Purchase: A specific action, namely the purchase of a product or service

- More than $500: The purchase amount

- In the last 12 months: The purchase time frame in relation to the current date

For a marketer or someone familiar with today’s no-code marketing technology, building this audience would typically involve the following steps:

- If not set by default, select the Customer entity, which represents the audience to build

- Apply a filter for customers located in the “EMEA” Region

- Select the Purchase table via an available link

- Apply a first filter based on the Amount attribute with an operator “greater than” and a value of “500”

- Apply a second filter based on the Transaction Date attribute with an operator “within the last” and a value of “1 year”

However, it’s important to note that these specific filters are highly dependent on the underlying data and data model. Business users and marketing operations teams must familiarize themselves with the data and data structure to accurately translate their idea into an actionable “query” to execute against the data storage system.

This is precisely the task you’d expect your generative AI technology to accomplish: translating the natural language prompt (idea) into a query that can be executed on the underlying data layer.
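As an illustration of the target output, the sketch below shows one query the prompt could translate to and runs it against a tiny in-memory SQLite database. The schema (`customer`, `purchase`, and their columns) and the sample rows are assumptions made up for this example, not an actual customer data model.

```python
import sqlite3
from datetime import date, timedelta

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, region TEXT);
    CREATE TABLE purchase (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customer(id),
        amount REAL,
        transaction_date TEXT  -- ISO 8601 date, e.g. 2024-01-31
    );
""")

# Sample dates are computed relative to today so the example does not go stale.
recent = (date.today() - timedelta(days=30)).isoformat()
old = (date.today() - timedelta(days=800)).isoformat()

conn.executemany(
    "INSERT INTO customer VALUES (?, ?, ?)",
    [(1, "Alice", "EMEA"), (2, "Bob", "EMEA"), (3, "Chloe", "APAC"), (4, "Dmitri", "EMEA")],
)
conn.executemany(
    "INSERT INTO purchase (customer_id, amount, transaction_date) VALUES (?, ?, ?)",
    [
        (1, 750.0, recent),  # EMEA, large, recent: matches
        (2, 120.0, recent),  # amount too small
        (3, 900.0, recent),  # wrong region
        (4, 650.0, old),     # older than 12 months
    ],
)

# "EMEA Customers who made a purchase of more than $500 in the last 12 months."
AUDIENCE_SQL = """
    SELECT DISTINCT c.id, c.name
    FROM customer AS c
    JOIN purchase AS p ON p.customer_id = c.id
    WHERE c.region = 'EMEA'
      AND p.amount > 500
      AND p.transaction_date >= date('now', '-12 months')
    ORDER BY c.id
"""
audience = conn.execute(AUDIENCE_SQL).fetchall()
print(audience)  # [(1, 'Alice')]
```

Each clause of the `WHERE` condition corresponds to one component of the prompt, which is the mapping the LLM has to get right.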

Before we jump into the evaluation, here are a few additional decisions made to keep the blog easy to read:

- The prompt has intentionally been kept simple to avoid ambiguity in its interpretation.

- The data schemas and data provided for illustration are (extremely) simplified.

- Metadata and other factors influencing LLM performance will be addressed at a later time. This does not reduce their importance in the success of generative AI use cases.

Buckle up, let’s explore the three scenarios and identify the one that suits your organization best.

Scenario 1 – The Perfection, AKA the Demo

A clean data structure, along with clean data. A dream. Even if you read this without technical expertise, it should take you a split second to identify the data necessary to answer our prompt: table names match the prompt request, and so do the columns. The data itself is completely clean.

LLM Expected Performance

| LLM Approach | Expected Performance |
|---|---|
| Third-Party LLM | ✅ |
| Third-Party LLM with RAG | ✅ |
| Fine-Tuned LLM | ✅ |
| In-House LLM | ✅ |

Which LLM Approach for This Scenario?

Using a Third-Party LLM should yield great performance. This is precisely why current generative AI demos with ChatGPT are so successful! The prompt and data are a “perfect match.”

However, if you can’t quite place your data in this category, don’t worry; you’re not alone. I have yet to encounter any enterprise organization that has managed to attain and sustain perfection in their data. And that’s perfectly fine: much can still be achieved without perfection. Just bear in mind that software vendors often opt for this scenario to showcase impressive demos.

Scenario 2 – The Standard, AKA the Reality

Thanks to significant investments made by the IT and data teams, the enterprise’s data is in good to great shape. The illustration above serves as just one example of a scenario in which the actual state can vary widely among organizations.

In this scenario, some tables may not have the exact names that align with the terminology used by the business teams today. For instance, some tables might refer to “profiles” or “users,” while others may refer to “customers.” Furthermore, the concept of a “purchase” was labeled as a “transaction” by your data team a decade ago.

| LLM Approach | Expected Performance |
|---|---|
| Third-Party LLM | ↘️ |
| Third-Party LLM with RAG | ✅ |
| Fine-Tuned LLM | ✅ |
| In-House LLM | ✅ |

Which LLM Approach for This Scenario?

In this case, grounding an LLM for your enterprise with additional context offers a higher likelihood of achieving optimal results. Alternatively, the terminology nuances and specificities unique to each organization can be captured by the LLM through fine-tuning.

Many experts currently agree that a Third-Party LLM with RAG will offer a better cost/performance ratio than a Fine-Tuned LLM, but it is too early to prove that hypothesis.
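One way to picture this grounding is a small glossary that maps business vocabulary to the names the data team actually used, attached to the prompt alongside the schema. The sketch below assumes a hypothetical schema and glossary (`profiles`, `transactions`, and the `grounding_context` helper are invented for illustration).

```python
# Hypothetical schema: table and column names as the data team defined them.
SCHEMA = {
    "profiles": ["profile_id", "region"],
    "transactions": ["profile_id", "amount", "transaction_date"],
}

# Hypothetical glossary: business vocabulary mapped to schema objects.
GLOSSARY = {
    "customer": "profiles",
    "purchase": "transactions",
}


def grounding_context(prompt: str) -> str:
    """Describe the schema, plus any glossary terms that appear in the prompt."""
    lines = ["Tables:"]
    for table, columns in SCHEMA.items():
        lines.append(f"- {table}({', '.join(columns)})")

    lowered = prompt.lower()
    matched = {term: table for term, table in GLOSSARY.items() if term in lowered}
    if matched:
        lines.append("Business terms:")
        for term, table in matched.items():
            lines.append(f'- "{term}" refers to table {table}')
    return "\n".join(lines)


request = "EMEA Customers who made a purchase of more than $500 in the last 12 months."
context = grounding_context(request)
print(context)  # sent to the LLM together with the request
```

With this context, the model no longer has to guess that “customers” live in `profiles` and “purchases” in `transactions`.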

Scenario 3 – The Hell, AKA the Chaos

Picture a world where the data team consists of interns entering the professional world, rotated every few months, all without supervision from senior personnel responsible for the organization’s data.

The purpose here is to depict a scenario where there is minimal to no data structure or defined data processes.

This chaos has been made even easier to accomplish with the advent of Data Lakes. Some organizations inadvertently created what’s often referred to as a “Data Swamp.”

It’s important to note that my illustration of this scenario remains somewhat simplified for the sake of this blog post — it’s quite challenging to illustrate a swamp with little data.

| LLM Approach | Expected Performance |
|---|---|
| Third-Party LLM | ↘️ |
| Third-Party LLM with RAG | ❓ |
| Fine-Tuned LLM | ↘️ |
| In-House LLM | ✅ |

Which LLM Approach for This Scenario?

In this case, both Third-Party and Fine-Tuned options are going to fall short. Be prepared to find hallucinations left and right. If your data expert struggles to locate the data you need, your Third-Party Model will face similar challenges. If you can provide the right context to your LLM (because you know which data and tables to use, or you created an accessible semantic layer on top of your messy data), chances are you could achieve somewhat acceptable results using a Third-Party LLM with RAG.

In general, the high level of data complexity and specificity in your situation will demand that your team roll up their sleeves and get their hands dirty to achieve acceptable performance with generative AI. It could be that the most suitable route is the In-House LLM for your enterprise.
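A semantic layer can take many forms. As one hedged illustration, the sketch below uses plain SQL views in an in-memory SQLite database to expose business-friendly names and normalized values on top of messy tables. The table names, columns, and sample rows are all invented for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical "swamp" tables: opaque names, inconsistent values.
    CREATE TABLE usr_dump_v3 (uid INTEGER, geo TEXT);
    CREATE TABLE tx_export_final2 (u INTEGER, amt_cents INTEGER, ts TEXT);

    INSERT INTO usr_dump_v3 VALUES (1, 'emea '), (2, 'EMEA'), (3, 'apac');
    INSERT INTO tx_export_final2 VALUES
        (1, 75000, '2024-03-01'),
        (3, 90000, '2024-04-15');

    -- Semantic layer: views with business names and cleaned-up values.
    CREATE VIEW customer AS
        SELECT uid AS id, UPPER(TRIM(geo)) AS region
        FROM usr_dump_v3;
    CREATE VIEW purchase AS
        SELECT u AS customer_id, amt_cents / 100.0 AS amount, ts AS transaction_date
        FROM tx_export_final2;
""")

# Queries (and LLM context) can now refer to the clean names only.
rows = conn.execute("""
    SELECT c.id, c.region, p.amount
    FROM customer AS c
    JOIN purchase AS p ON p.customer_id = c.id
    WHERE c.region = 'EMEA'
""").fetchall()
print(rows)  # [(1, 'EMEA', 750.0)]
```

The underlying tables stay as messy as before; the views only give the LLM, and your analysts, a cleaner surface to reason about.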

However, I’d also strongly recommend addressing your data challenges first.

If your situation mirrors the chaos I’ve described, it’s quite challenging to envision your team successfully building an in-house, high-performing generative AI stack — a new and complex technology.

Strategy When Selecting LLMs for Enterprises

Selecting a Strategy for Yourself

If you’ve decided to invest in generative AI within your teams, starting with testing Third-Party LLMs such as ChatGPT is an excellent way to familiarize yourself with this new technology. Beyond that, the most immediate return on investment is likely to come from adding context to the Third-Party LLM or from fine-tuning, both of which leverage the specifics of your business and data. It’s important to note that the In-House approach may remain beyond the reach of most brands.

I’m not suggesting that among the four options described above — Third-Party LLM, Third-Party LLM with RAG, Fine-Tuned LLM, and In-House LLM — only one will be adopted within an organization or product. There are numerous use cases and situations that will determine the adoption of one over the other, and in some cases, organizations may even switch between options over time for a given use case.

Another point to keep in mind is that your data structure and the data itself are only two aspects influencing the performance of your models. We will discuss additional components, such as a semantic layer on top of the data, in the future.

| LLM Approach | Takeaway |
|---|---|
| Third-Party LLM | Great for demos. |
| Third-Party LLM with RAG | Likely the most accessible option and the most likely to deliver satisfactory results for the time being. |
| Fine-Tuned LLM | A great option to evaluate against the Third-Party LLM with RAG option, if investing in fine-tuning an LLM is possible. |
| In-House LLM | Often too complex and expensive for most enterprise brands. |

Selecting a Vendor with Generative AI Capabilities

You’ve made the decision to choose a vendor for your marketing stack, with “generative AI capabilities” as a critical selection criterion.

Selecting technology can be a complex task, and making the right decisions in a new and noisy environment is even more challenging.

The journey with Generative AI is a long one, and while there are plenty of reasons to be excited about the opportunities ahead, buyers should also be aware that demos often showcase a vision built on a level of perfection that does not reflect the complex enterprise world.

Getting Ready for a Long Ride With LLMs for Enterprises

The performance of your generative AI use cases and a natural language interface will only be as good as the data used. By no means would I suggest that perfect data is necessary to begin with generative AI. However, it’s essential not to spend all your time and resources on the AI aspect without getting your data in order in parallel.

Generative AI is a journey, and at ActionIQ, we are eager to continue working closely with our partners and clients to embrace change and enhance revenue and efficiency through customer data technology for your teams.

Interested in learning more? Don’t hesitate to reach out to our experts.